Some time last fall, Amazon launched a service called Mechanical Turk. The name comes from a well-known illusion:

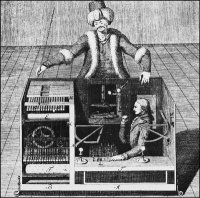

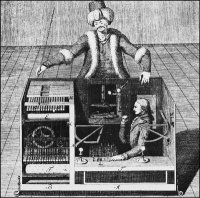

In 1769, Hungarian nobleman Wolfgang von Kempelen astonished Europe by building a mechanical chess-playing automaton that defeated nearly every opponent it faced. A life-sized wooden mannequin, adorned with a fur-trimmed robe and a turban, Kempelen’s “Turk” was seated behind a cabinet and toured Europe confounding such brilliant challengers as Benjamin Franklin and Napoleon Bonaparte. To persuade skeptical audiences, Kempelen would slide open the cabinet’s doors to reveal the intricate set of gears, cogs and springs that powered his invention. He convinced them that he had built a machine that made decisions using artificial intelligence. What they did not know was the secret behind the Mechanical Turk: a human chess master cleverly concealed inside. (From *What is Amazon Mechanical Turk?*)

The idea behind Mechanical Turk is simple. It’s artificial, artificial intelligence. If you recruit human beings to do tasks that would otherwise require expensive software development, you get the work done more efficiently and with greater accuracy. Simple tasks like image recognition are easy for human beings and very hard for image recognition software. Here’s how it works: the mturk site lists available HITs (Human Intelligence Tasks). A HIT is any task that needs to be performed, whether it be writing descriptions, adding metadata, or recognizing and matching images. Normally, the easier the HIT, the smaller the reward for completing it. The most common type of HIT involves matching street-level photographs with their corresponding addresses. These normally pay $.03 per completed HIT. The most likely goal of this type of task is to improve the accuracy of results for Amazon’s street-level mapping program through A9. Amazon has a long way to go to document every street in every city in every country in the world, so these HITs will probably remain the most common. It’s a huge endeavor, since the photographs will also need to be updated from time to time as businesses relocate.

When the mturk service first launched, I clicked through about 2,000 sets of street address images in a week’s time, with an accuracy rating of 85% (according to Amazon). Completing image HITs is the kind of thing you can do while on the phone or surfing the internet: just leave the window up and chew through 20 or 30 at a time. Since you’re looking at street-level photos of cities like Portland and San Francisco, it’s almost like taking a weird, boring vacation. Judging from the hundreds of photos I clicked through, Philadelphia looks like an interesting place to visit.

For each successful HIT (85% of those 2,000), I got three cents deposited in my Amazon account, for a total of around $50. Once completed HITs are credited to your account, you are free to transfer the funds to your bank account. It’s a horribly boring and monotonous way to make money, but it works pretty well. After that first week, as mturk became better known, the easy HITs dried up as human click bots around the world vied for the easy pennies. Now it’s so competitive (three cents is a lot of money to people in the Third World) that there’s no point in even bothering with it. You’re unlikely to find the large pools of easy HITs that were once available. It is, however, an amazing experiment in parceling out to the global masses the tasks that no employee wants to spend a day doing.
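A short Python sketch can check the earnings arithmetic above. It makes three assumptions. First, Amazon’s 85% accuracy rating is treated as the share of HITs that were actually paid, which is how the paragraph reads it. Second, every HIT is assumed to pay a flat three cents. Third, any fees are ignored. `worker_payout` is a hypothetical helper, not part of any Amazon API.

```python
from decimal import Decimal


def worker_payout(hits_submitted: int, approval_rate: Decimal, reward: Decimal) -> Decimal:
    """Estimate earnings when only approved HITs are paid a flat reward.

    Hypothetical helper: assumes the accuracy rating equals the fraction
    of submitted HITs that were approved and paid, and ignores any fees.
    """
    approved = int(hits_submitted * approval_rate)  # count whole HITs only
    return approved * reward


# 85% of 2,000 street-address HITs at $0.03 each
print(worker_payout(2000, Decimal("0.85"), Decimal("0.03")))  # 51.00
```

That is 1,700 paid HITs, or $51, which matches the "around $50" figure. Using `Decimal` instead of floats keeps the cents exact.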

Here is the coolest part. Amazon is now making this service available to other companies and individuals through its Requester program, which allows anyone to offer tasks to the mturk masses. Need some cheap market research? Send it to mturk and have your survey completed for $.01 a head. That’s 1,000 survey results for ten bucks. Other companies are paying for podcast transcription at cut-rate prices of $5 to $10 per podcast. Transcription is an obvious winner for this type of service, but other tasks could include translation, editing/proofreading, OCR/handwriting recognition, and content analysis. There are built-in mechanisms to ensure quality results. Before you can work on more advanced jobs like transcription, you are often required to pass a qualification test prepared by the Requester. The other quality mechanism is simple: if the Requester is not satisfied with the result, they do not have to accept and pay for it. Companies like Hit Builder are springing up to build software and services that streamline the process of using mturk for Requesters. This is an idea that could really take off. As the broker between its army of mechanical Turks and the companies who need human capital, Amazon stands to make some serious money, but only if more companies start using it.
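The Requester side uses the same arithmetic in reverse, plus the pay-only-for-accepted-work rule described above. Here is a minimal Python sketch under stated assumptions:

- Each response earns a flat reward.
- Any Amazon fees are ignored.
- `batch_cost` is a hypothetical helper, not Amazon’s actual Requester API.
- A caller-supplied `is_acceptable` check stands in for the Requester’s review.

```python
from decimal import Decimal


def batch_cost(submissions, reward, is_acceptable):
    """Return (amount owed, number approved) for one batch of HITs.

    Hypothetical model: the Requester reviews every submission and pays
    the flat reward only for the ones it accepts; rejected work is unpaid.
    Any fees Amazon may charge on top are ignored.
    """
    approved = [s for s in submissions if is_acceptable(s)]
    return reward * len(approved), len(approved)


# 1,000 survey responses at one cent a head, all accepted
responses = [f"answer {i}" for i in range(1000)]
cost, approved = batch_cost(responses, Decimal("0.01"), lambda s: True)
print(approved, cost)  # 1000 10.00

# The same batch with 10 blank responses rejected as unsatisfactory
with_blanks = responses[:990] + [""] * 10
cost, approved = batch_cost(with_blanks, Decimal("0.01"), lambda s: s.strip() != "")
print(approved, cost)  # 990 9.90
```

In this sketch, rejecting a response simply drops it from the bill. A qualification test would act earlier, limiting which workers may take the HIT at all.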

It was announced last week that the

The San Francisco Chronicle ran